Abstract

Out-of-distribution (OOD) detection is crucial for the safe deployment of neural networks. Existing CLIP-based approaches perform OOD detection by devising novel scoring functions or sophisticated fine-tuning methods. In this work, we propose SeTAR, a novel, training-free OOD detection method that leverages selective low-rank approximation of weight matrices in vision-language and vision-only models. SeTAR enhances OOD detection via post-hoc modification of the model's weight matrices using a simple greedy search algorithm. Based on SeTAR, we further propose SeTAR+FT, a fine-tuning extension that optimizes model performance for OOD detection tasks. Extensive evaluations on the ImageNet1K and Pascal-VOC benchmarks show SeTAR's superior performance, with relative reductions in false positive rate of up to 18.95% and 36.80% compared to zero-shot and fine-tuning baselines, respectively. Ablation studies further validate SeTAR's effectiveness, robustness, and generalizability across different model backbones. Our work offers a scalable, efficient solution for OOD detection, setting a new state of the art in this area.

Method

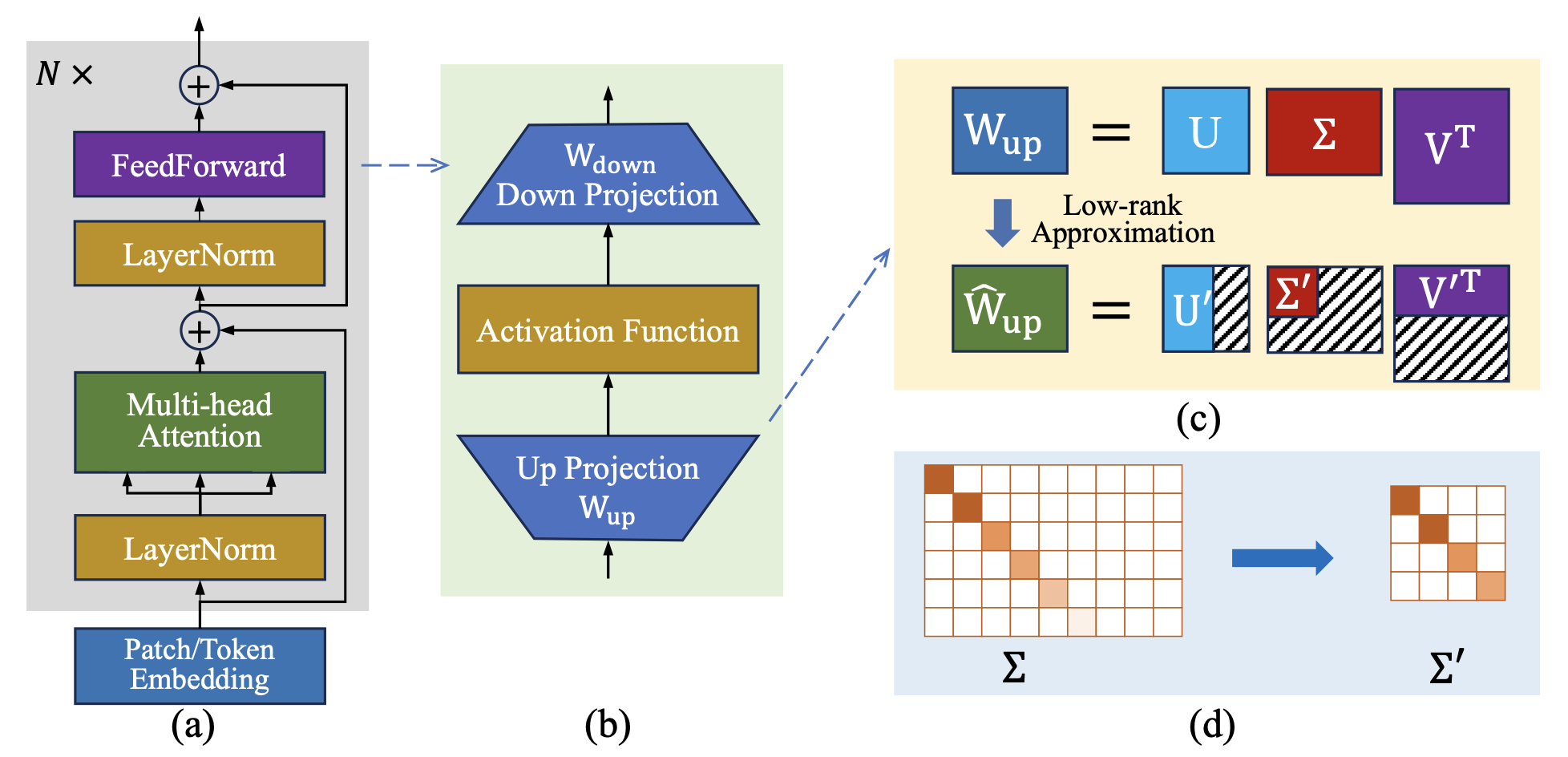

The overview of SeTAR

We propose SeTAR, a simple yet effective out-of-distribution (OOD) detection method based on selective low-rank approximation. The method is training-free: it relies only on post-hoc modification of the model's weight matrices. SeTAR enhances OOD detection across a variety of scoring functions and model backbones and integrates seamlessly with existing zero-shot OOD detection methods.

SeTAR achieves its selective low-rank approximation by applying Singular Value Decomposition (SVD) to the model’s weight matrices. Using SVD, each weight matrix is decomposed into a set of singular values and corresponding vectors. SeTAR selectively keeps only the principal singular components, which hold the most significant information, while discarding minor singular components. This approach improves robustness by reducing sensitivity to noise in less impactful weight matrix elements.
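A minimal NumPy sketch of the truncation step for a single weight matrix, assuming the number of principal components to keep (`rank`) is already known; `low_rank_approx` is a hypothetical helper, not code from the SeTAR release. It decomposes the matrix with SVD and rebuilds it from the top-`rank` singular triplets only.

```python
import numpy as np


def low_rank_approx(weight: np.ndarray, rank: int) -> np.ndarray:
    """Keep the top-`rank` singular components of `weight` and discard the minor ones."""
    # Thin SVD: weight = u @ diag(s) @ vt, with s sorted in descending order.
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    # Rebuild the matrix from the principal singular triplets only.
    return (u[:, :rank] * s[:rank]) @ vt[:rank, :]


rng = np.random.default_rng(0)
w = rng.standard_normal((64, 32))  # random stand-in for a model weight matrix
w_hat = low_rank_approx(w, rank=8)
```

By the Eckart–Young theorem, this truncation is the best rank-`rank` approximation in Frobenius norm, and its error equals the norm of the discarded (minor) singular values.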

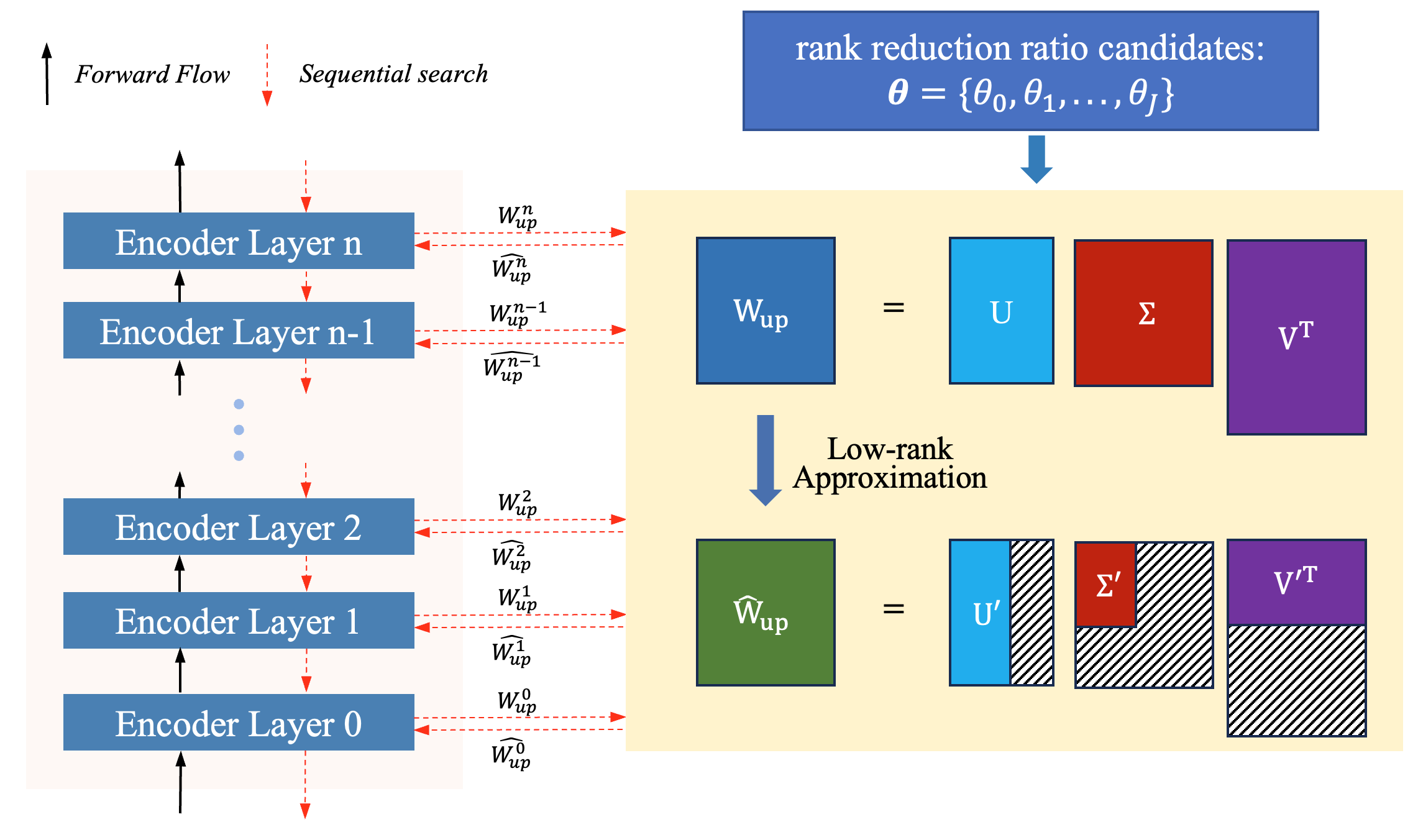

The SeTAR framework uses a top-to-bottom, image-to-text greedy search algorithm to optimize rank reduction across the model's weight matrices. The search identifies which layers to approximate and by how much, balancing computational efficiency against detection performance. Because the low-rank configuration is searched on in-distribution (ID) images only, SeTAR improves OOD detection without retraining the model.
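The greedy search can be sketched in plain Python under a few assumptions: each searchable matrix is identified by a hypothetical layer name, a "ratio" is the fraction of minor singular components removed, the candidate ratios are illustrative, and `val_loss` stands in for "apply the truncations, then evaluate the loss on ID validation images". Layers are visited once, top to bottom in the image encoder and then in the text encoder, and a layer's choice is frozen once made.

```python
from typing import Callable, Dict, List, Sequence

Config = Dict[str, float]  # layer name -> fraction of minor singular components removed


def search_order(n_vision: int, n_text: int) -> List[str]:
    """Top-to-bottom within each encoder, image encoder before text encoder (hypothetical names)."""
    vision = [f"vision.{i}" for i in reversed(range(n_vision))]
    text = [f"text.{i}" for i in reversed(range(n_text))]
    return vision + text


def greedy_rank_search(
    layers: Sequence[str],
    ratios: Sequence[float],
    val_loss: Callable[[Config], float],
) -> Config:
    """Visit each layer once, keeping the ratio that most lowers the ID validation loss."""
    config: Config = {}
    best_loss = val_loss(config)  # loss of the unmodified model
    for layer in layers:
        best_ratio = 0.0
        for ratio in ratios:
            loss = val_loss({**config, layer: ratio})
            if loss < best_loss:  # accept only strict improvements
                best_loss, best_ratio = loss, ratio
        if best_ratio > 0.0:
            config[layer] = best_ratio  # earlier choices stay fixed (greedy)
    return config


# Toy objective standing in for the real validation loss.
targets = {"vision.1": 0.2, "vision.0": 0.0, "text.1": 0.4, "text.0": 0.1}


def toy_loss(cfg: Config) -> float:
    return sum((cfg.get(name, 0.0) - t) ** 2 for name, t in targets.items())


result = greedy_rank_search(search_order(2, 2), [0.0, 0.1, 0.2, 0.3, 0.4], toy_loss)
```

On this toy objective the search recovers the per-layer targets and leaves `vision.0` untouched, because no nonzero ratio lowers the loss there.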

Greedy Search Algorithm

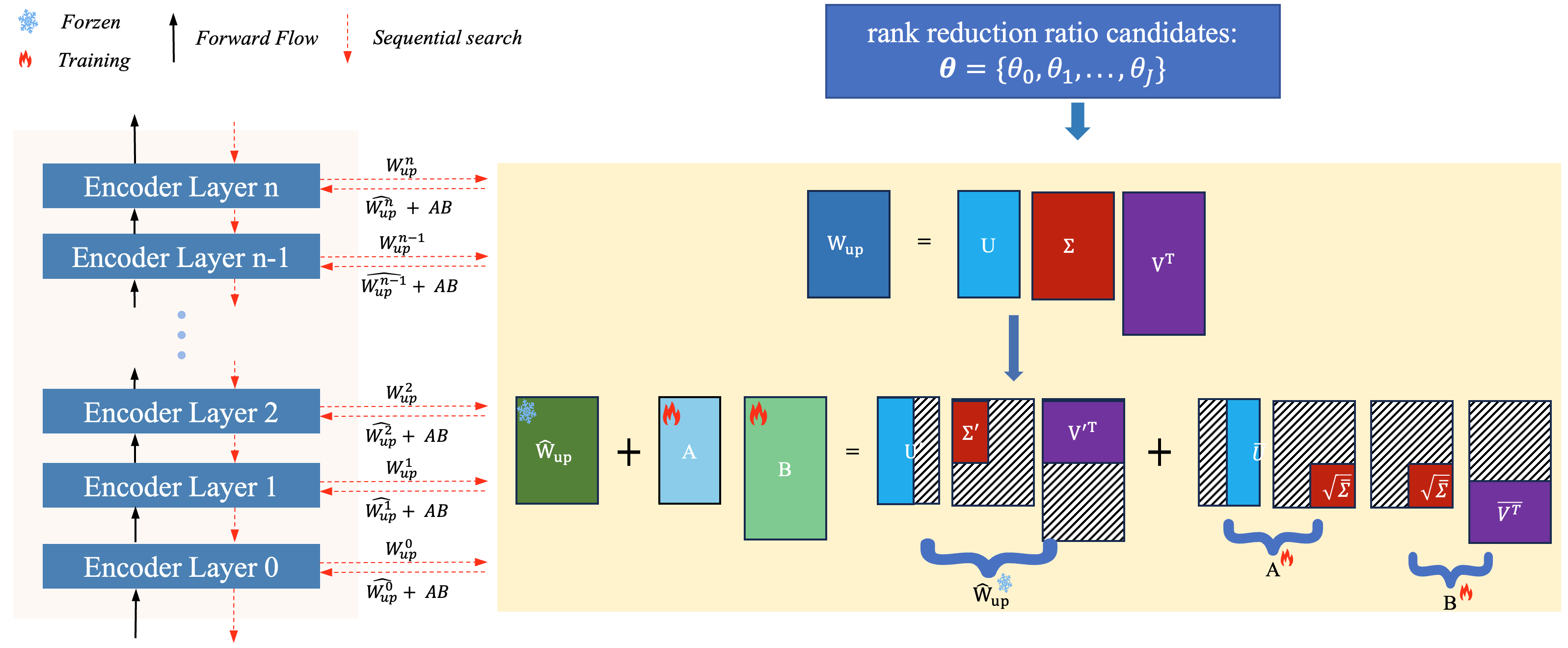

Building on SeTAR, we introduce SeTAR+FT, which leverages fine-tuning by freezing the major components while selectively tuning the minor components of the weight matrices. This process allows SeTAR+FT to enhance performance in fine-tuning-based OOD detection tasks, achieving state-of-the-art results on multiple benchmarks.
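One way to express "freeze the major components, tune the minor ones" is to split each matrix into a frozen principal part plus a trainable low-rank term initialized from the minor singular components. The sketch below assumes that parametrization, including the symmetric square-root split of the singular values between the two factors; `split_major_minor` is hypothetical and not the authors' code. During fine-tuning only `b` and `a` would receive gradients.

```python
import numpy as np


def split_major_minor(weight: np.ndarray, rank: int):
    """Return (w_major, b, a) with weight == w_major + b @ a at initialization.

    w_major holds the top-`rank` singular components (frozen); b and a are
    built from the remaining minor components (trainable). Hypothetical scheme.
    """
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    w_major = (u[:, :rank] * s[:rank]) @ vt[:rank, :]  # principal part, kept frozen
    sqrt_minor = np.sqrt(s[rank:])
    b = u[:, rank:] * sqrt_minor                        # shape (out_dim, n_minor)
    a = sqrt_minor[:, None] * vt[rank:, :]              # shape (n_minor, in_dim)
    return w_major, b, a


rng = np.random.default_rng(0)
w = rng.standard_normal((48, 16))
w_major, b, a = split_major_minor(w, rank=4)
```

At initialization the split reproduces the original weights exactly, so fine-tuning starts from the pretrained model's behavior.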

SeTAR+FT: Finetuning Extension of SeTAR

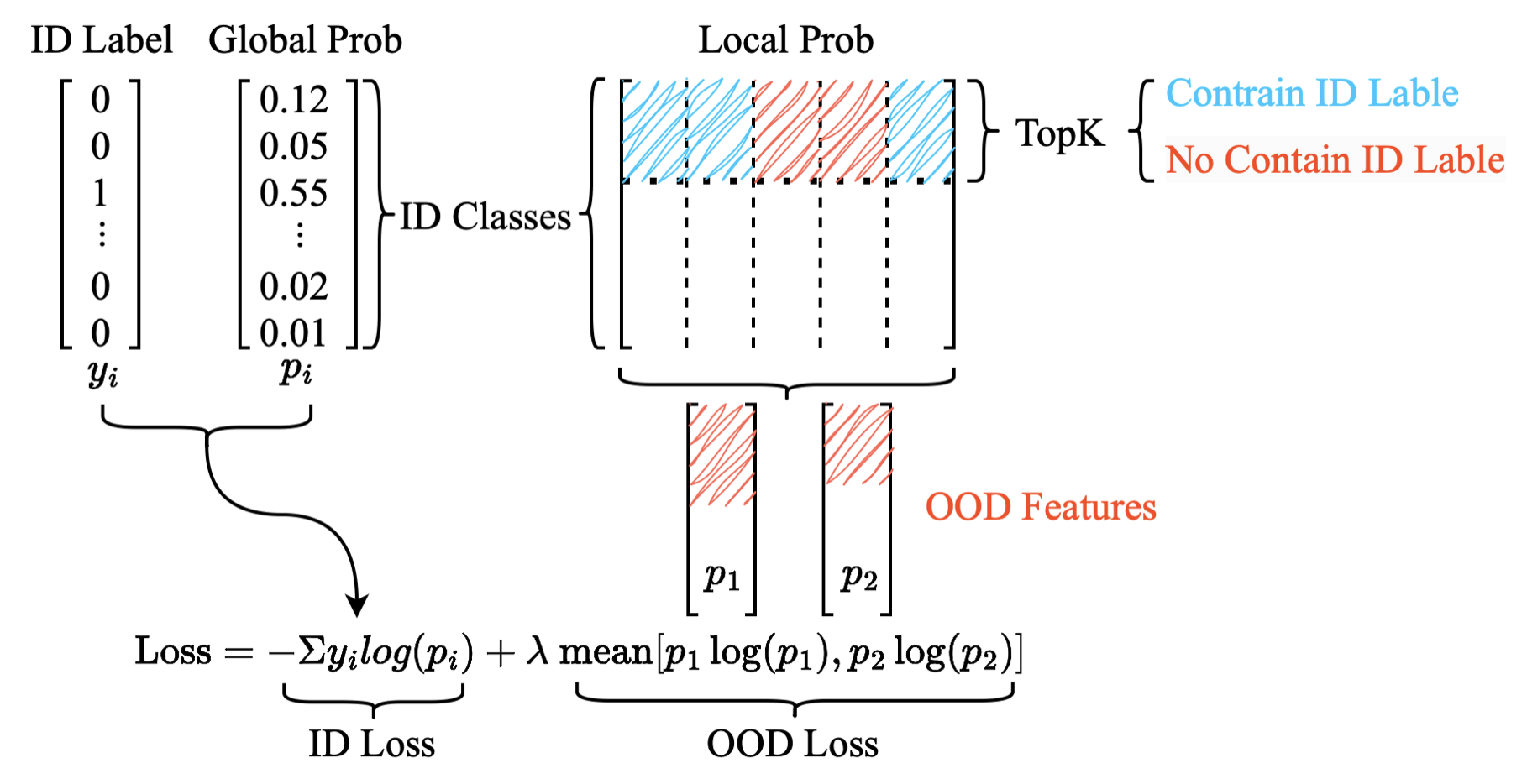

Loss Function: The SeTAR framework uses a combined loss designed to enhance OOD detection without requiring any OOD training data. The loss has two components: an ID loss and an OOD loss. The ID loss is the cross-entropy between in-distribution (ID) labels and the corresponding predicted probabilities, ensuring accurate classification within the known classes. The OOD loss is computed on pseudo-OOD features mined from the local (region-level) class probabilities of ID images: a top-K selection identifies local features whose ID label is not among their top-K predicted classes, and these features are encouraged to form a distribution that does not favor any ID class. The overall loss is computed as:

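$$\mathcal{L} = -\sum_{i} y_i \log p_i + \lambda \, \mathrm{mean}\Big[\sum_{j} p^{\mathrm{ood}}_j \log p^{\mathrm{ood}}_j\Big],$$

where the first term is the ID loss and the second term, weighted by the hyperparameter λ, is the OOD loss. Since $\sum_j p_j \log p_j$ is the negative entropy, minimizing it maximizes the entropy of the pseudo-OOD predictions. This formulation makes SeTAR highly effective at OOD detection while using only ID data.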
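A NumPy sketch of the combined loss for one image, assuming the model already provides whole-image probabilities over the C ID classes and per-region probabilities for R local features. The function name, argument layout, and the `1e-12` clipping are illustrative assumptions.

```python
import numpy as np


def combined_loss(global_probs, label, local_probs, top_k, lam):
    """ID cross-entropy plus lambda-weighted negative entropy of pseudo-OOD local features.

    global_probs: (C,) class probabilities of the whole image over the C ID classes.
    label:        index of the image's ground-truth ID class.
    local_probs:  (R, C) class probabilities of R local (region-level) features.
    """
    # With a one-hot y, -sum_i y_i log p_i reduces to -log p_label.
    id_loss = -np.log(global_probs[label])
    # Pseudo-OOD: regions whose top-K predicted classes do not include the label.
    top_classes = np.argsort(-local_probs, axis=1)[:, :top_k]
    is_ood = ~np.any(top_classes == label, axis=1)
    if not is_ood.any():
        return float(id_loss)
    p_ood = np.clip(local_probs[is_ood], 1e-12, 1.0)  # avoid log(0)
    # sum_j p_j log p_j is the negative entropy; minimizing it spreads p_ood out.
    neg_entropy = np.sum(p_ood * np.log(p_ood), axis=1)
    return float(id_loss + lam * neg_entropy.mean())
```

For example, with label 0 and `top_k=1`, a region with probabilities `[0.1, 0.5, 0.4]` is treated as pseudo-OOD (its top class is 1), while a region with `[0.6, 0.3, 0.1]` contributes nothing to the OOD term.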

Loss Function

Results

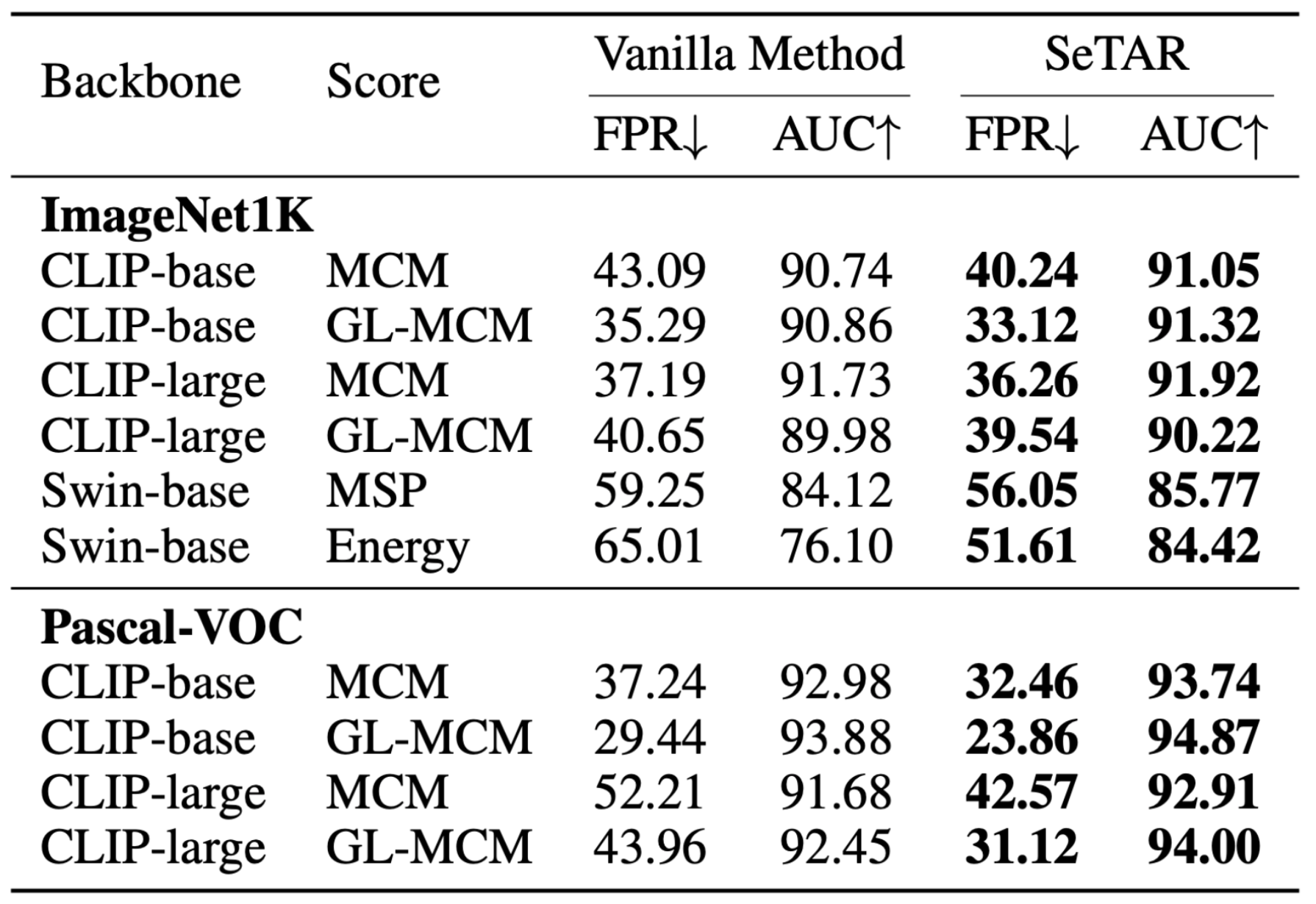

Training-Free Results: SeTAR achieves state-of-the-art out-of-distribution (OOD) detection performance in training-free, zero-shot settings. On both ImageNet1K (with iNaturalist, SUN, Places, Texture as OOD) and Pascal-VOC (with iNaturalist, SUN, Places, Texture, ImageNet22K, COCO as OOD) benchmarks, SeTAR significantly reduces the False Positive Rate at 95% True Positive Rate (FPR95) and increases the Area Under the Receiver Operating Characteristic (AUROC) compared to the vanilla methods. SeTAR is effective across different backbones (CLIP-base, CLIP-large, Swin-base) and various score functions (MCM, GL-MCM, MSP, Energy).
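For readers unfamiliar with the evaluation, the sketch below shows an MCM-style score and the two reported metrics in plain Python. It assumes higher scores mean "more in-distribution" and treats ID as the positive class, so TPR is the fraction of ID samples kept; the temperature default and the tie handling are simplifying assumptions, and library implementations may interpolate thresholds differently. GL-MCM and Energy are not shown; MSP applies the same max-softmax to classifier logits.

```python
import math
from typing import Sequence


def mcm_score(similarities: Sequence[float], temperature: float = 1.0) -> float:
    """MCM-style score: max softmax over image-to-class-prompt cosine similarities."""
    scaled = [s / temperature for s in similarities]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    return max(exps) / sum(exps)


def fpr_at_95_tpr(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    """Fraction of OOD samples still accepted at the threshold that keeps 95% of ID samples."""
    ranked = sorted(id_scores, reverse=True)
    k = -(-95 * len(ranked) // 100)  # ceil(0.95 * n) with exact integer arithmetic
    threshold = ranked[k - 1]
    return sum(s >= threshold for s in ood_scores) / len(ood_scores)


def auroc(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    """Probability that a random ID sample outscores a random OOD sample (ties count 1/2)."""
    wins = 0.0
    for a in id_scores:
        for b in ood_scores:
            wins += 1.0 if a > b else (0.5 if a == b else 0.0)
    return wins / (len(id_scores) * len(ood_scores))
```

With ID scores `[0.9, 0.8, 0.7, 0.6]` and OOD scores `[0.65, 0.3]`, the 95% TPR threshold is 0.6, so one of the two OOD samples is accepted (FPR95 = 0.5), and 7 of the 8 ID/OOD pairs are ranked correctly (AUROC = 0.875).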

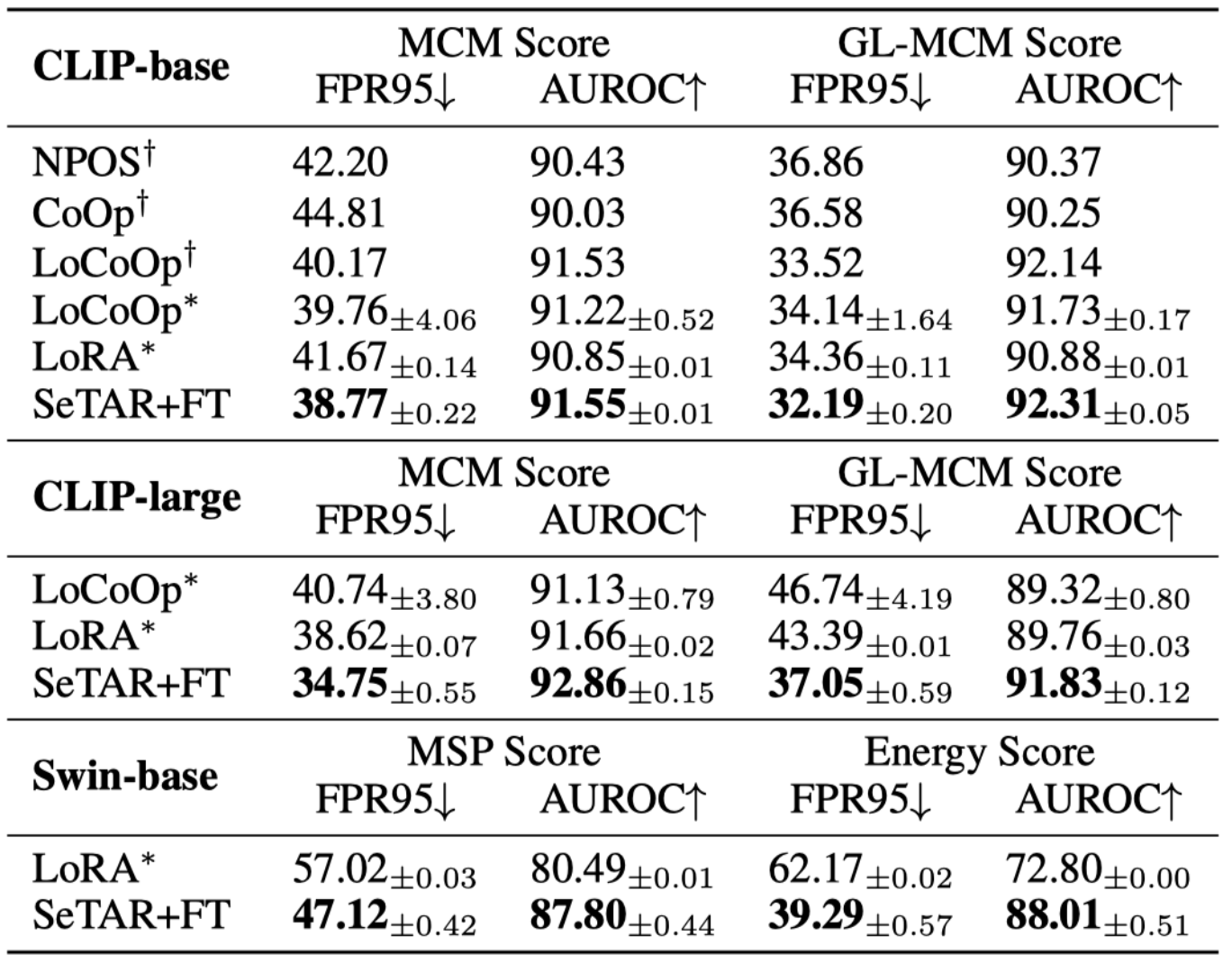

Fine-Tuning Results: SeTAR+FT, which integrates fine-tuning with selective low-rank approximation, outperforms existing fine-tuning methods such as LoCoOp and LoRA. On the ImageNet1K benchmark, SeTAR+FT outperforms all baselines with both the MCM and GL-MCM scoring functions. For example, with CLIP-base as the backbone, SeTAR+FT achieves average relative FPR95 reductions of 3.97% and 6.67% compared to LoCoOp and LoRA, respectively. When scaled up to CLIP-large, SeTAR+FT outperforms LoCoOp and LoRA by a relative 17.92% and 12.45% in FPR95 on the same benchmark. Similar results hold for the Swin Transformer, where SeTAR+FT outperforms LoRA by a relative 17.36% and 36.80% in FPR95 with the MSP and Energy scoring functions, respectively. These results highlight SeTAR+FT's effectiveness in achieving new state-of-the-art OOD detection performance in fine-tuning-based settings.
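The percentages above are relative reductions rather than absolute differences in FPR95 points. Assuming the usual definition (baseline − ours) / baseline, a small helper makes the distinction concrete; the function name and the input values are hypothetical and not taken from the results tables.

```python
def relative_reduction(baseline: float, ours: float) -> float:
    """Reduction of `ours` relative to `baseline`, in percent (not percentage points)."""
    return (baseline - ours) * 100.0 / baseline


# Hypothetical FPR95 values: 10 points lower in absolute terms.
print(relative_reduction(50.0, 40.0))  # prints 20.0
```

A drop from 50.0 to 40.0 FPR95 is therefore reported as a 20% relative reduction, even though the absolute gap is 10 points.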

Training-Free Results on ImageNet1K and Pascal-VOC

Fine-tuning Results on ImageNet1K

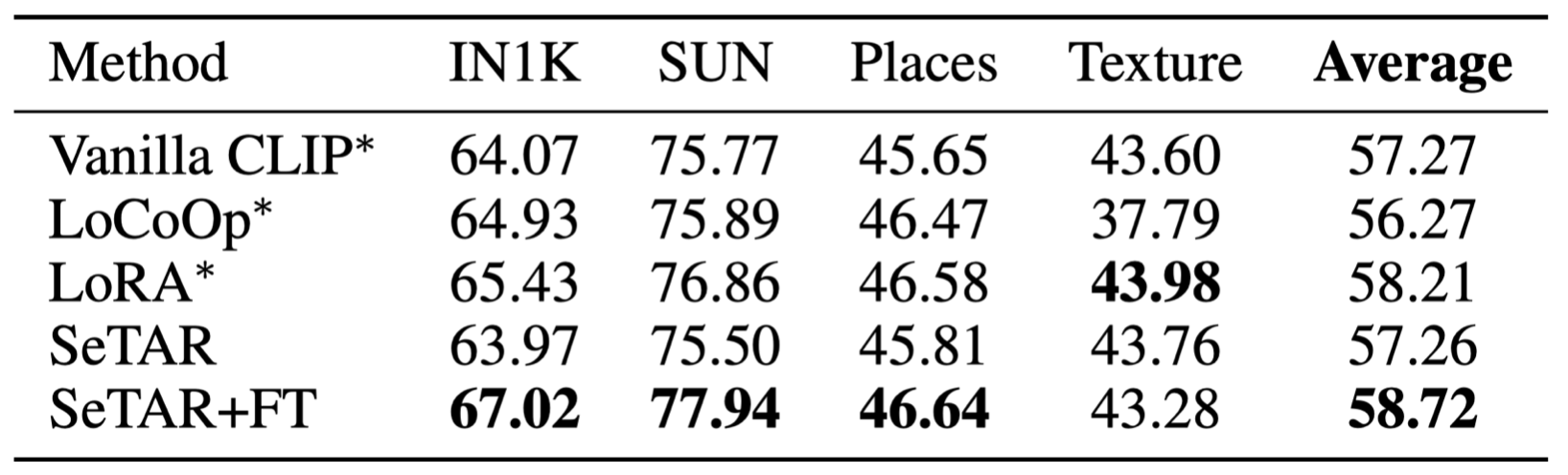

Image Classification Accuracy: SeTAR+FT achieves the highest accuracy on both the in-distribution dataset (IN1K) and the out-of-distribution datasets (SUN, Places, Texture), with an average accuracy of 58.72%. Compared to vanilla CLIP, LoCoOp, and LoRA, SeTAR+FT shows significant improvements, especially on IN1K and SUN, confirming that it maintains classification performance while enhancing OOD detection.

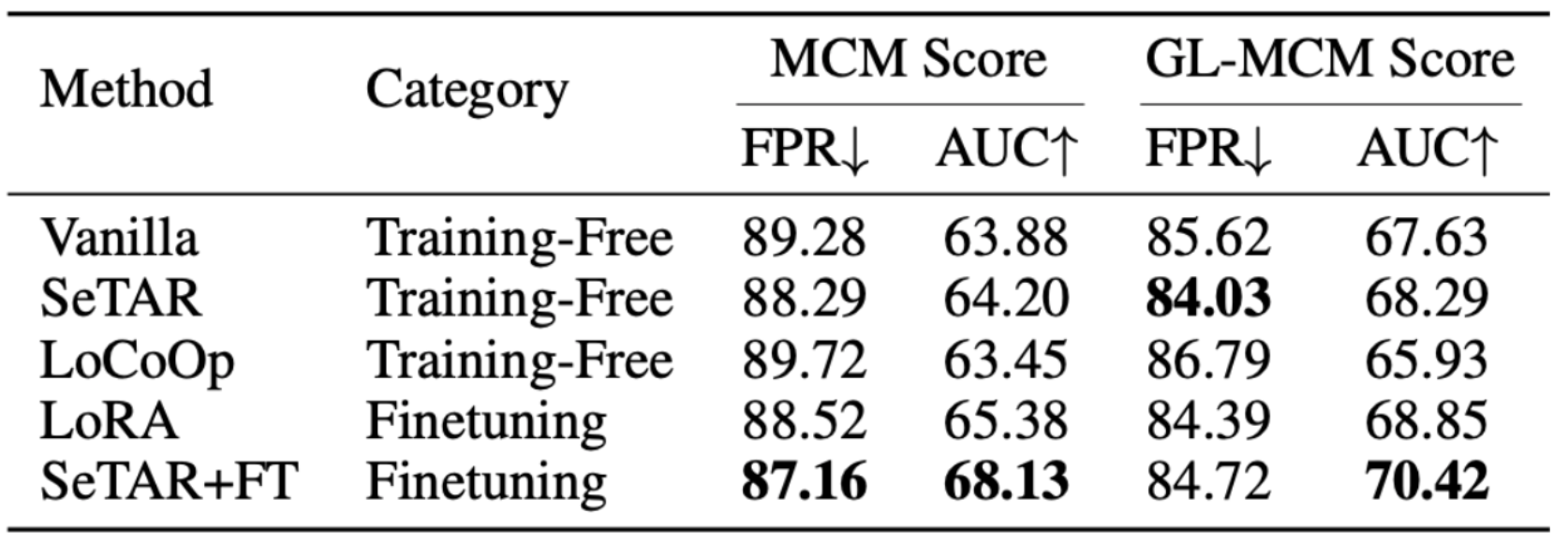

Near-OOD Results: In near-OOD detection (ImageNet1K as ID and SSB-Hard as OOD), SeTAR+FT achieves the lowest FPR95 and the highest AUROC with both the MCM and GL-MCM scoring functions. With GL-MCM, it reduces FPR95 to 87.16 and raises AUROC to 70.42, outperforming both training-free and fine-tuning-based baselines, including LoCoOp and LoRA. These results highlight SeTAR+FT's robustness in detecting near-OOD samples in fine-tuning-based settings.

Image Classification Accuracy

Near-OOD Results

Ablations

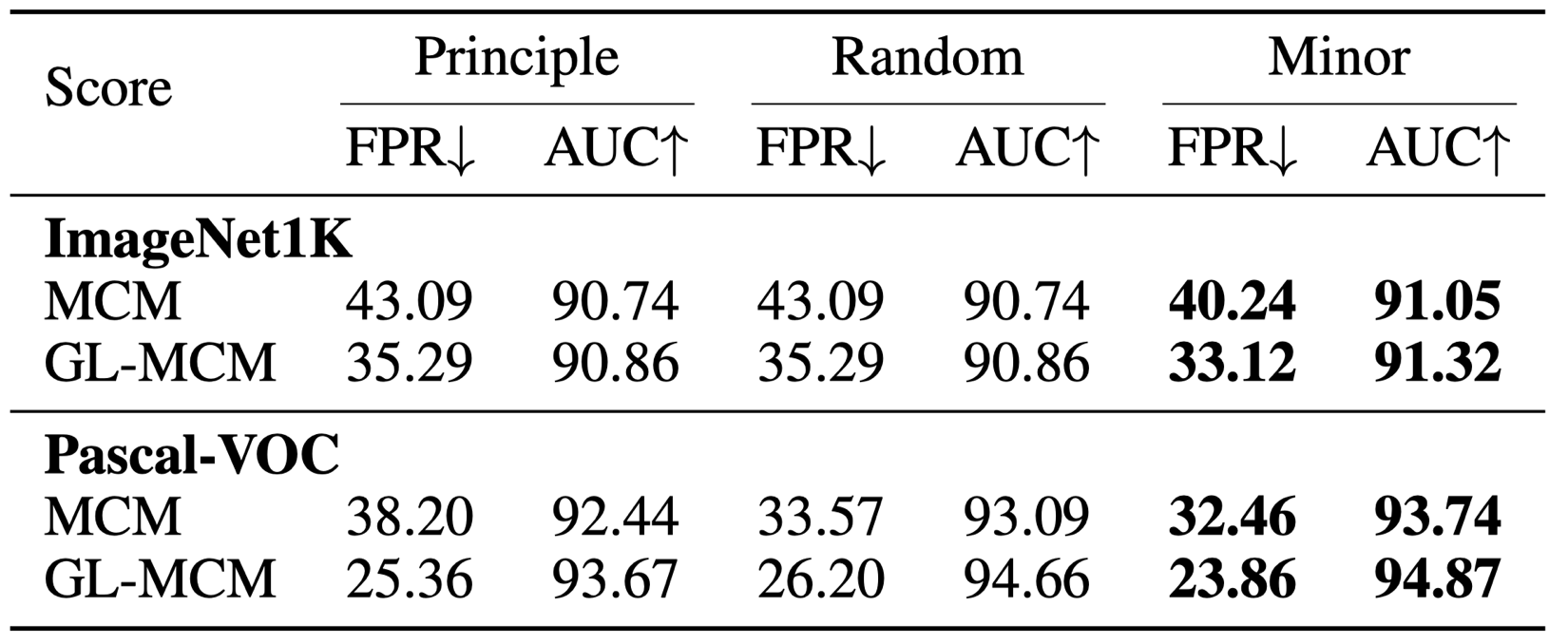

Pruning Strategies: Selectively pruning the minor singular components, as SeTAR does, yields the best results, with the lowest FPR95 and highest AUROC on both the ImageNet1K and Pascal-VOC benchmarks. In comparison, pruning the principal components or pruning at random leads to higher FPR95 and lower AUROC, indicating that preserving the critical components while discarding the minor ones is optimal for enhancing OOD detection. For instance, on Pascal-VOC with the GL-MCM score, SeTAR's minor-component pruning achieves an FPR95 of 23.86 and an AUROC of 94.87, outperforming the other strategies.
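The three strategies differ only in which singular components are dropped. The sketch below, with hypothetical strategy names and a random matrix, compares their reconstruction error; this is merely a proxy, since the ablation itself measures OOD metrics, but it shows why discarding minor components perturbs the weights least.

```python
import numpy as np


def prune_components(weight, n_drop, strategy, rng=None):
    """Remove `n_drop` singular components chosen by `strategy` and rebuild the matrix."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    idx = np.arange(len(s))
    if strategy == "minor":        # smallest singular values (what SeTAR discards)
        drop = idx[len(s) - n_drop:]
    elif strategy == "principal":  # largest singular values
        drop = idx[:n_drop]
    elif strategy == "random":     # uniformly random components
        rng = rng if rng is not None else np.random.default_rng()
        drop = rng.choice(len(s), size=n_drop, replace=False)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    keep = np.setdiff1d(idx, drop)
    return (u[:, keep] * s[keep]) @ vt[keep, :]


rng = np.random.default_rng(0)
w = rng.standard_normal((32, 32))
errors = {
    name: np.linalg.norm(w - prune_components(w, 8, name, rng))
    for name in ("minor", "random", "principal")
}
```

The Frobenius error of each strategy equals the norm of the singular values it drops, so minor pruning always gives the smallest error and principal pruning the largest, with random pruning in between.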

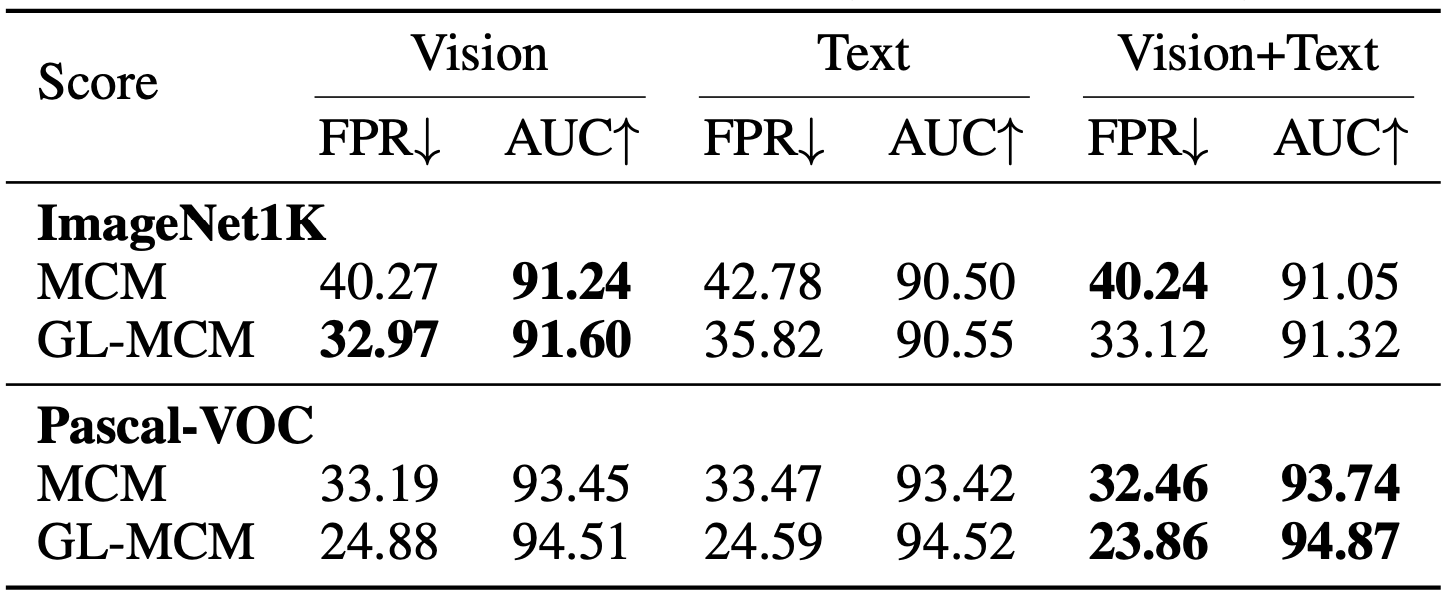

Modality Impact: Utilizing both vision and text modalities (Vision+Text) consistently enhances OOD detection performance over using a single modality. In both ImageNet1K and Pascal-VOC benchmarks, combining Vision+Text results in the lowest FPR and highest AUROC, demonstrating the benefit of multi-modal integration. Specifically, for Pascal-VOC with the GL-MCM score, combining vision and text achieves an FPR of 23.86 and AUROC of 94.87, indicating a strong synergy between these modalities for robust OOD detection.

Pruning Strategies

Modality Impact